December 13, 2011 report

Here come the quantum dot TVs and wallpaper

(PhysOrg.com) -- A British firm's quantum dot technology will be used for flat screen TVs and flexible screens, according to the company’s chief executive.

The company expects the quantum dots to be in use in ultra-thin, lightweight flat-screen TVs by the end of next year and, within another three years, in flexible screens that can be rolled up like paper or used as wall coverings.

The company, Nanoco Group, is reportedly working with Asian electronics companies to bring this technology to market.

“The first products we are expecting to come to market using quantum dots will be the next generation of flat-screen televisions,” Nanoco chief executive Michael Edelman has stated.

Nanoco describes itself as the “world leader in the development and manufacture of cadmium-free quantum dots.” While quantum dot technology is not new, the scientists at Nanoco are making progress toward their goal of mass production. Earlier this year, the company, which was founded in 2001 and is based in Manchester, announced that it had successfully produced a 1 kg batch of red cadmium-free quantum dots to the specification of a major Japanese corporation.

The ability to mass-produce consistently high-quality quantum dots, says the company site, enables product designers to envisage their use in consumer products and other applications for the first time, and then bring those products to market.

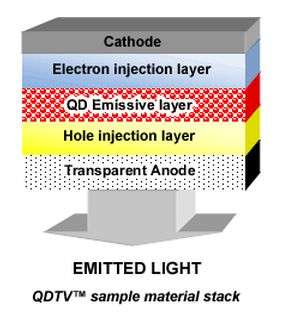

Quantum dots are nano-materials with a core semiconductor and organic shell structure. This structure can be modified and built on to ensure the quantum dots work in applications that may use different carrier systems. These include, but are not limited to, printing inks (including inks for inkjet printing), silicone, polycarbonate, polymethyl methacrylate-based polymers, alcohols and water.

Nanoco’s team says it can manipulate the organic surfaces of the quantum dots to work in applications like electroluminescent displays, solid state lighting and biological imaging.

Flexible displays that can be used as wall coverings have attracted particular interest. Individual light-emitting quantum dot crystals are 100,000 times smaller than the width of a human hair. Used together in large numbers, they could potentially form room-sized screens on wallpaper.

The ability to precisely control the size of a quantum dot enables the manufacturer to determine the wavelength of the emission, which determines the color of light that the eye perceives. During production the dots can be tuned to emit any desired color of light. Dots can even be tuned beyond visible light, into the infrared or the ultraviolet.
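To illustrate how dot size can set emission color, here is a minimal Python sketch based on a textbook effective-mass approximation that keeps only the quantum-confinement term and ignores the electron-hole Coulomb correction. It is not Nanoco's method, and the function name and the material parameters (bulk band gap, relative effective masses) are hypothetical placeholders chosen for illustration, not measured values for any real product.

```python
# Physical constants (SI units)
H = 6.62607015e-34     # Planck constant, J*s
C = 2.99792458e8       # speed of light, m/s
EV = 1.602176634e-19   # joules per electronvolt
M0 = 9.1093837015e-31  # electron rest mass, kg


def emission_wavelength_nm(radius_nm, bulk_gap_ev, me_rel, mh_rel):
    """Rough emission wavelength of a spherical dot of the given radius.

    Simplified effective-mass model: bulk band gap plus a confinement
    energy that grows as 1/radius^2. The Coulomb term is omitted, so
    treat the result as a trend, not a prediction.
    """
    r = radius_nm * 1e-9
    confinement_j = (H ** 2 / (8 * r ** 2)) * (
        1 / (me_rel * M0) + 1 / (mh_rel * M0)
    )
    energy_j = bulk_gap_ev * EV + confinement_j
    return H * C / energy_j * 1e9


if __name__ == "__main__":
    # Placeholder material parameters (assumptions, for illustration only)
    gap_ev, me_rel, mh_rel = 1.35, 0.08, 0.6
    for radius in (2.0, 2.5, 3.0, 4.0):
        wl = emission_wavelength_nm(radius, gap_ev, me_rel, mh_rel)
        print(f"radius {radius:.1f} nm -> about {wl:.0f} nm")
```

Under this simplified model, shrinking the dot raises the emission energy and shifts the light toward the blue end of the spectrum, while larger dots emit closer to the bulk material's wavelength.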

Nanoco defines itself as “a world leader in the development and manufacture of cadmium-free quantum dots” at a time when being “cadmium-free” offers a particular advantage. Cadmium is generally used in LEDs in lighting and displays. The European Union has, however, exempted it from its Restriction of Hazardous Substances (RoHS) directive because there is not yet a practical substitute, according to eWEEK Europe. That exemption is due to end in July 2014.

“Our research and development department is also constantly engaged in the creation of new quantum dots with additional properties sought by the market, such as our RoHS-compliant heavy metal-free quantum dots,” says the company.

© 2011 PhysOrg.com